Imagine that you are a new homeowner, shopping for insurance for your new house. You live in an area prone to earthquakes, and you are not a big risk-taker. You decide that you should have earthquake insurance. You are on the web researching earthquake insurance policies. You come across the web site of Acme Insurance, an international leader in earthquake damage coverage. The web site says they are the best earthquake insurance company because they not only pay for earthquake damage, they also have an innovative program to actually prevent earthquakes experienced by their beneficiaries. The program involves assigning an earthquake prevention coordinator (EPC) to each homeowner. The EPC does one session of telephonic earthquake prevention coaching, sends some earthquake prevention educational materials by e-mail, and makes a follow-up call to ensure that the homeowner is exhibiting good earthquake prevention behaviors. This is a proprietary program, so more details are only available to Acme Insurance beneficiaries. The program is proven to reduce earthquakes by 99%. You click on the link to view the research study with the documented proof.

The study was conducted by Acme Analysis, a wholly-owned earthquake informatics subsidiary of Acme Insurance. The study begins by noting an amazing discovery. When Acme analyzed its earthquake claims for 2010, it noted that 90% of its earthquake damage cost occurred in only 10% of its beneficiaries. It noted that these high cost beneficiaries were living in particular cities. For example, it noted high earthquake claims cost in Port au Prince, Haiti for damage incurred during the January 12, 2010 earthquake there. It developed an innovative high risk classification approach based on the zodiac sign of the homeowners’ birth date and the total earthquake claims cost for damage incurred in the prior month. On February 1, 2010, they applied this risk classification to identify high risk homeowners, most of whom were Libras or Geminis living in Port au Prince. They targeted 100 of those high risk homeowners for their earthquake prevention program. The EPCs sprang into action, making as many earthquake prevention telephone coaching calls and sending as many earthquake prevention e-mails as they could, considering the devastated telecommunications infrastructure in Port au Prince.

The program evaluation team then compared the rate of earthquakes exceeding 6.0 on the Richter scale and average earthquake damage claims for those 100 people for the pre-intervention period vs. the post-intervention period. Among the 100 beneficiaries targeted by the program, the average number of major earthquakes plummeted from 1 in the pre-intervention period (January, 2010) to 0 in the post-intervention period (March, 2010), and the number of minor earthquakes (including aftershocks) dropped from 20 down to just 10. But the program was not just good for the beneficiaries wanting to avoid earthquakes. It was a win-win for Acme Insurance. Earthquake damage claims had dropped from an average of $20,000 per beneficiary during the January, 2010 pre-intervention period to an average of just $200 for damage incurred during the post-intervention period in March, 2010, when two of the targeted beneficiaries experienced damage from an aftershock. The program effectiveness was therefore 1 – (200/20,000) = 0.99. That means the innovative program was 99% effective in preventing earthquake damage claims cost. After considering the cost of the earthquake prevention coordinators and their long-distance telephone bills, the program return on investment (ROI) was calculated to be 52-to-1. The program was a smashing success, proving that Acme Insurance is the right choice for earthquake coverage.

Can you spot the problem? Can you extrapolate this insight to the evaluation of health care innovations such as disease management, care coordination, utilization management, patient-centered-medical home, pay-for-performance, accountable care organizations, etc.?

The problem is called “regression toward the mean.” It is a type of bias that can affect the results of an analysis, leading to incorrect conclusions. The problem occurs when a sub-population is selected from a larger population based on having extreme values of some measure of interest. The fact that the particular members had an extreme value at that point in time is partly a result of their underlying unchanging characteristics, and partly a matter of chance (random variation). Port au Prince, like certain other cities along tectonic plate boundaries, is earthquake prone. This is an unchanging characteristic. But it was a matter of chance that a major earthquake hit Port au Prince in the particular month of January, 2010. If you track Port au Prince in subsequent months, its theoretical risk of an earthquake will remain somewhat higher than average because it is still an earthquake prone area. But chances are that, in any typical month, Port au Prince will not have a major earthquake.

An analogous effect can be observed when you identify “high risk” patients based on having recently experienced extremely high rates of health care utilization and associated high cost. The high cost of such patients is partly driven by the underlying characteristics of the patients (e.g., age, gender, chronic disease status), and partly by random chance. If you track such patients over time, their cost-driving characteristics will lead them to have somewhat higher costs than the overall population. But the chance component will not remain concentrated in the selected patients. It will be spread over the entire population. As a result, the cost for the identified “high risk” patients will decrease substantially. It will “regress” toward the mean. With high risk classification methods typically used in the health care industry, my experience is that this regression is in the 20-60% range over a 3-12 month period, without any intervention at all. Of course, the overall population cost will continue to follow its normal inflationary trend line.
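To make the mechanism concrete, here is a minimal simulation sketch. Everything in it is an assumption made for illustration: each patient's cost is modeled as a stable component multiplied by a chance component that is redrawn every period, and the lognormal parameters are arbitrary rather than fitted to any real claims data. No intervention of any kind is simulated.

```python
import random

def mean(values):
    return sum(values) / len(values)

def simulate_costs(n_patients=20_000, seed=0):
    """Return (period1, period2) costs for the same patients, with no intervention."""
    rng = random.Random(seed)
    period1, period2 = [], []
    for _ in range(n_patients):
        # Stable component (age, chronic disease status, ...): same in both periods.
        stable = rng.lognormvariate(7.5, 0.8)
        # Chance component: drawn independently in each period.
        period1.append(stable * rng.lognormvariate(0.0, 0.8))
        period2.append(stable * rng.lognormvariate(0.0, 0.8))
    return period1, period2

def track_top_group(period1, period2, top_fraction=0.10):
    """Pick the highest-cost patients in period 1 and follow them into period 2."""
    ranked = sorted(range(len(period1)), key=lambda i: period1[i], reverse=True)
    top = ranked[: int(len(period1) * top_fraction)]
    pre = mean([period1[i] for i in top])
    post = mean([period2[i] for i in top])
    return pre, post, 1 - post / pre

period1, period2 = simulate_costs()
pre, post, drop = track_top_group(period1, period2)
print(f"whole population: {mean(period1):,.0f} -> {mean(period2):,.0f}")
print(f"top 10% selected: {pre:,.0f} -> {post:,.0f} (apparent savings {drop:.0%})")
```

Under these made-up parameters the selected group's average cost falls sharply while the population average barely moves, and the selected group still ends up above the population average because its stable component is genuinely higher.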

This regression-toward-the-mean phenomenon has been at play in many, many evaluations of clinical programs of health plans and wellness and care management vendors. Sometimes unwittingly. Sometimes on purpose. Starting back in the 1990s, disease management vendors were fond of negotiating “guarantees” and “risk sharing” arrangements with managed care organizations where they would pick high risk people and guarantee that their program would reduce cost by a certain amount. Based on regression toward the mean, the vendor could rely on the laws of probability to achieve the promised result, regardless of the true effectiveness of their program. The vendor would get their negotiated share of the savings. Good work if you can get it. It lasted for a few years until the scheme was widely discredited. But not widely enough, it appears. Wellness and care management vendors still routinely compare cost before and after their intervention for a cohort of patients selected based on having extreme high cost in the pre-intervention period. Health plans and employers eat up the dramatic savings numbers, happy to see that the data “proved” that they made wise investments.

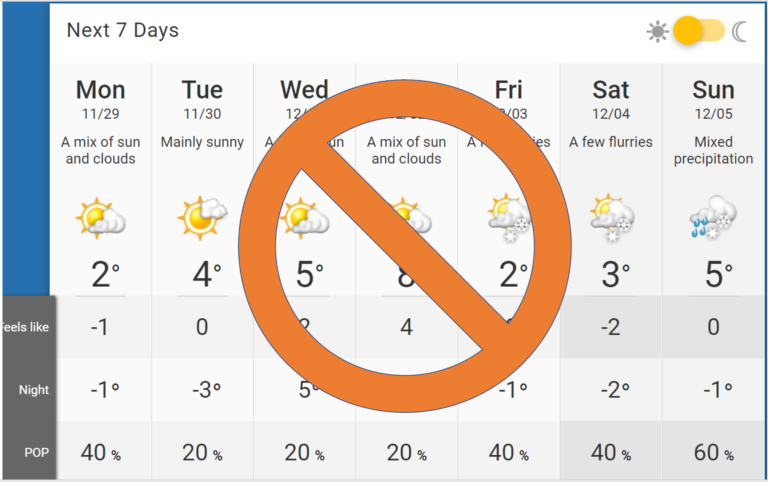

Study designs suffering from obvious regression-toward-the-mean bias will usually be excluded from publication in peer-reviewed scientific journals. But, they do show up in less formally-reviewed clinical program evaluations by hospitals and physician organizations. For example, in a recent analysis of a patient-centered medical home (PCMH) pilot, the authors concluded that the program had caused a “48 percent reduction in its high-risk patient population and a 35 percent reduction in per-member-per-month costs” as shown in the following graphic.

In this PCMH program, a total of 46 “high risk poly” members were selected based on having high recent health care utilization involving 15 or more health care providers. The intervention consisted of assigning a personal health nurse who developed a personal health plan, having a personal health record (based on health plan data), and providing reimbursement for monthly 1-hour visits with a primary care physician. The analysis involved tracking of the risk category (based on health plan claims data) and the per-member-per-month (PMPM) cost for the cohort of 46 patients, comparing the pre-intervention period (2009) to the intervention period (2010). I’m sure the program designers and evaluators for this PCMH pilot are well meaning and not trying to mislead anybody. I share their enthusiasm for the PCMH model of primary care delivery. But, I think the evaluation methodology is not up to the task of proving whether the program did or did not save money. Furthermore, even with a better study design to solve the problem of regression-to-the-mean bias, the random variation in health care costs is far too large to be able to detect even a strong effect of a PCMH program in a population of only 46 patients. Or even 4,600 patients for that matter. I’d guess that proper power calculations would reveal that at least 46,000 patients would be required to have a chance of proving PCMH effectiveness in reducing cost.
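For a rough feel of what such a power calculation looks like, here is a sketch using the textbook normal-approximation formula for comparing two group means. The inputs are assumptions, not figures from the PCMH pilot: `cv` is the standard deviation of per-patient cost divided by its mean (set to 3.0 here purely for illustration), and `relative_effect` is the true savings as a fraction of mean cost. Real cost data are heavily skewed, so a proper calculation would need more care than this.

```python
import math
from statistics import NormalDist

def patients_per_group(cv, relative_effect, alpha=0.05, power=0.80):
    """Approximate patients needed in EACH group of a two-group comparison of mean cost.

    cv              : standard deviation of cost / mean cost (assumed input)
    relative_effect : true cost reduction as a fraction of mean cost (assumed input)
    """
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)   # two-sided significance level
    z_power = NormalDist().inv_cdf(power)
    return math.ceil(2 * (z_alpha + z_power) ** 2 * (cv / relative_effect) ** 2)

for effect in (0.20, 0.10, 0.05):
    n = patients_per_group(cv=3.0, relative_effect=effect)
    print(f"true savings of {effect:.0%}: about {n:,} patients per group")
```

With these illustrative inputs, even a 20% true savings calls for thousands of patients per group, and a 5% savings calls for tens of thousands. A cohort of 46 is nowhere close under any of these scenarios.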

So, how do you solve the problem of Regression Toward the Mean?

As with any type of bias, the problem is with the comparability of the comparison group. The gold standard study design is, of course, a randomized controlled trial (RCT), where the comparability of the comparison group is assured by randomly assigning subjects between the intervention group and the comparison group (also called the “control group”).

If randomization is not possible, one can try to find a concurrent comparison group that is not eligible for the program and which is thought to be otherwise similar to the eligible population. The same selection criteria that are applied to the eligible population to pick targets are also used in the ineligible population to pick “simulated targets” for a comparison group. Note that in such a concurrent control design, the comparison should be between targets and the simulated targets, without considering which of the targets volunteered to actually participate in the intervention. This aspect of the design is called an “intention to treat” approach, intended to avoid another type of bias called “volunteer bias.” (More on that in a future post.)
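The concurrent comparison design can be sketched in a few lines. This is a simulation under stated assumptions, not an analysis of real data: the cost model, the 90th-percentile threshold, and the 10% `TRUE_EFFECT` are all made up, and the eligible and ineligible populations are assumed to be statistically identical. Note that the code never asks who participated; every target counts, which is the intention-to-treat idea.

```python
import random

def mean(values):
    return sum(values) / len(values)

def simulate_population(n, rng):
    """Hypothetical (pre_cost, post_cost) pairs: stable risk times fresh luck each period."""
    people = []
    for _ in range(n):
        risk = rng.lognormvariate(7.5, 0.8)           # persistent characteristics
        pre = risk * rng.lognormvariate(0.0, 0.8)     # luck in the baseline period
        post = risk * rng.lognormvariate(0.0, 0.8)    # independent luck at follow-up
        people.append((pre, post))
    return people

def select_targets(people, cost_threshold):
    """The selection rule: baseline cost at or above a fixed threshold."""
    return [p for p in people if p[0] >= cost_threshold]

def post_to_pre_ratio(group):
    return mean([post for _, post in group]) / mean([pre for pre, _ in group])

rng = random.Random(1)
TRUE_EFFECT = 0.10   # assumed average savings across ALL targets, participants or not
eligible = simulate_population(100_000, rng)
ineligible = simulate_population(100_000, rng)

# One threshold (the eligible population's 90th percentile) is applied to both populations.
threshold = sorted(pre for pre, _ in eligible)[int(0.9 * len(eligible))]
targets = [(pre, post * (1 - TRUE_EFFECT)) for pre, post in select_targets(eligible, threshold)]
simulated_targets = select_targets(ineligible, threshold)

naive_savings = 1 - post_to_pre_ratio(targets)
adjusted_savings = 1 - post_to_pre_ratio(targets) / post_to_pre_ratio(simulated_targets)
print(f"naive pre/post savings:      {naive_savings:.0%}")
print(f"targets vs simulated targets: {adjusted_savings:.0%} (true effect {TRUE_EFFECT:.0%})")
```

The naive pre/post figure bundles the program effect together with regression toward the mean. Dividing by the simulated targets' own pre-to-post change removes the regression that both groups share, leaving an estimate much closer to the assumed effect.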

Often, the evaluators do not have access to concurrent data from an ineligible population to provide a concurrent comparison group. In such a case, an alternative is “requalification” of the population in the pre-intervention period and the post-intervention period. Requalification involves using the exact same selection criteria used to pick targets at baseline and shifting it forward in time to pick a comparison group. The result will be a comparison group that is a different list of patients than the ones picked for the intervention. Some of the targets of the intervention may be requalified to be in the comparison group. Others will not. Some members of the comparison group will be members who did not qualify to be in the intervention group. It is counter-intuitive to some people that such an approach creates a better comparison group than just tracking the intervention group over time. But, with requalification, you are assured that the same influence that luck had in selecting people based on recent utilization will be present in the comparison group. The idea is to avoid bias in the selection process by trying to make the selection process symmetrical between intervention and comparison groups.
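Here is one possible reading of requalification as a sketch, with several assumptions made loudly: three periods of simulated cost data, a selection rule of "top 10% by cost in the qualifying period," a made-up 10% `TRUE_EFFECT`, and no inflationary trend. The same rule is applied to a different period to build the comparison group, so that each group's outcome is measured one period after its own qualification. Real data would also need a trend adjustment between periods.

```python
import random

def mean(values):
    return sum(values) / len(values)

def top_spenders(costs, period, fraction=0.10):
    """Indices of members in the top `fraction` by cost in the given period."""
    ranked = sorted(range(len(costs)), key=lambda i: costs[i][period], reverse=True)
    return set(ranked[: int(len(costs) * fraction)])

rng = random.Random(2)
TRUE_EFFECT = 0.10   # assumed program savings among targets (made up for illustration)

# costs[i] = [period 0, period 1, period 2] for member i; no cost trend is simulated.
costs = []
for _ in range(100_000):
    risk = rng.lognormvariate(7.5, 0.8)   # stable characteristics
    costs.append([risk * rng.lognormvariate(0.0, 0.8) for _ in range(3)])

targets = top_spenders(costs, period=1)       # real targets: qualified on period 1
for i in targets:
    costs[i][2] *= 1 - TRUE_EFFECT            # the program runs during period 2

comparison = top_spenders(costs, period=0)    # same rule, applied to a different period

target_outcome = mean([costs[i][2] for i in targets])         # one period after qualifying
comparison_outcome = mean([costs[i][1] for i in comparison])  # also one period after qualifying
naive_savings = 1 - target_outcome / mean([costs[i][1] for i in targets])
requalified_savings = 1 - target_outcome / comparison_outcome
overlap = len(targets & comparison)

print(f"members in both groups: {overlap:,} of {len(targets):,}")
print(f"naive pre/post savings: {naive_savings:.0%}")
print(f"requalified savings:    {requalified_savings:.0%} (true effect {TRUE_EFFECT:.0%})")
```

The two lists overlap only partly, as described above. Because luck played the same role in picking both groups, both outcomes have regressed by about the same amount, and the comparison lands near the assumed effect instead of the inflated pre/post number.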

If I apply these remedies for regression toward the mean bias, does that mean I will be assured of reliable evaluation results?

Unfortunately, no. The bad news is that clinical programs are devilishly hard to properly evaluate. There are many other sources of bias intrinsic to many evaluation designs. And the random variation in the measures of interest is often very large compared to the relatively weak effects of most clinical programs. This creates a “signal to noise” problem that is particularly bad when trying to evaluate small pilot programs or the performance of individual providers over short time periods.

If you really want to create a learning loop within your organization, there is no alternative to building a team that includes people with the expertise required to identify and solve thorny evaluation and measurement problems.
