In a recent paper in JAMA Internal Medicine, Andrew Wong and his colleagues from the University of Michigan reported their “external validation” of the Epic Sepsis Model (ESM), a proprietary predictive model deployed in hundreds of hospitals in the US. The authors reported a sensational finding that thrilled people who love to hate on Epic Systems Corporation, the developer of the model and the dominant electronic medical record (EMR) vendor, particularly among academic medical centers. They accused Epic’s ESM of “poor discrimination” and “poor performance.” They used impressively sophisticated methods, but only did half the job. They probably failed to do the rest of the job not because of laziness, but because they made an error common among health services researchers: they conceptualized a tool as if it were a treatment.

How does the Epic Sepsis Model work?

The ESM is a predictive model that takes pieces of data that are routinely captured and stored in the Epic EMR to produce a number called the ESM score. The ESM can be compared to a laboratory assay that produces a numeric result. They are both tests. Tests are tools. A proper and useful evaluation of a test can only be done in the context of the intended use of the test and the impact of this intended use on the relevant outcomes. Asking if a test is “effective” does not make any more sense than asking if a scalpel is effective. Obviously, that depends on what you’re planning to cut.

The Epic EMR calculates the ESM score every 15 minutes throughout a hospital admission. A higher ESM score indicates a higher probability that the patient has or will develop sepsis, a dangerous, life-threatening response to infection that requires urgent antibiotic treatment. If doctors were to wait for blood culture results to confirm sepsis, too much time would pass. Therefore, doctors initiate antibiotic treatment when they judge that the patient is likely to have sepsis. The sooner such patients are identified, the sooner antibiotic treatment can begin, and the less likely the sepsis is to kill the patient. The purpose of the ESM is to save lives by identifying hospitalized patients who are likely to develop sepsis earlier than their physicians would have identified them, leading to earlier antibiotic treatment.

But, as with laboratory tests, the ESM score itself does not directly cause the initiation of antibiotic treatment. Rather, the Epic EMR can be configured to compare the ESM score to a threshold value and to generate an alert message when the threshold is exceeded. The alert is presented within the EMR to the physicians caring for the patient, augmenting the information that is already in the physicians’ heads. The alert and the score may or may not influence the physician’s decision to order antibiotic treatment. The key point is that neither the ESM nor the alert is a treatment. Therefore, neither produces treatment outcomes that can be evaluated.
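To make the separation between the score, the threshold, and the alert concrete, here is a minimal Python sketch of the alerting step only. It assumes a list of scores already computed at 15-minute intervals (the ESM scoring logic itself is proprietary and not reproduced). The names `scan_scores`, `Alert`, and the `first_only` flag are hypothetical illustrations, not Epic’s configuration options, and reading “exceeds the threshold” as strictly greater than is an assumption.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Alert:
    patient_id: str
    minute: int     # minutes since admission when the alert fired
    score: float


def scan_scores(patient_id: str, scores: List[float], threshold: float,
                interval_minutes: int = 15, first_only: bool = True) -> List[Alert]:
    """Compare each periodic risk score to a threshold and return the alerts generated.

    scores[i] is assumed to have been computed at minute i * interval_minutes.
    Nothing here orders antibiotics: the alerts are only information for the physician.
    """
    alerts: List[Alert] = []
    for i, score in enumerate(scores):
        if score > threshold:  # "exceeded" taken to mean strictly greater than
            alerts.append(Alert(patient_id, i * interval_minutes, score))
            if first_only:  # suppress any repeat alerts after the first one
                break
    return alerts


if __name__ == "__main__":
    scores = [2.0, 4.5, 6.3, 7.1]  # made-up scores, one every 15 minutes
    print(scan_scores("patient-A", scores, threshold=6))
    print(scan_scores("patient-A", scores, threshold=6, first_only=False))
```

With these made-up scores and a threshold of 6, the first-alert-only setting returns a single alert at minute 30, while `first_only=False` returns alerts at minutes 30 and 45. Either way, the output is information handed to a physician; what happens next depends on the protocol.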

Protocols and Processes

In the medical field, there are only two things that can be evaluated or assessed:

- protocols (which are designs for care processes that are intended to produce theoretical outcomes) and

- instances of care processes that produce real-world outcomes.

Protocols and processes can be simple, or they can be complex. When a protocol or process is very simple, we may describe it as a “guideline” or “standard of care.” When it gets a bit more complex, we may describe it as a “care map” or “pathway.” When a protocol or process gets very complex, we may describe it as a “system of care” or a “care model.” In any case, the assessment of real-world process instances is accomplished by measuring the magnitude of the various outcomes that are actually produced. Ideally, the real-world process instances are carried out in the context of a randomized clinical trial (RCT), making the comparison of outcomes more convincing. If the real-world processes are just part of routine practice, the evaluator must consider whether the real-world process is being executed as designed or has failed to conform to the design (i.e., it is not “in control”). The assessment of protocols is accomplished by comparing them to alternative protocols in terms of the magnitude and uncertainty of the various outcomes that are expected to be produced, using mathematical models to calculate such expected outcomes.

A protocol or process may incorporate the use of a predictive model, such as by specifying the initiation of a particular treatment when a predictive model output value exceeds a particular threshold value. A predictive model is only “good” or “bad” to the degree it is incorporated into a protocol that is expected to achieve favorable outcomes or a process that is measured to achieve favorable outcomes. The same predictive model can produce favorable or unfavorable outcomes when used in different protocols or processes.

The fundamental principle that tools cannot be assessed for effectiveness also applies to papers that purport to be evaluations of the effectiveness of any decision support, lab test, telehealth sensor, information system, artificial intelligence algorithm, machine learning algorithm, patient risk assessment instrument, or improvement methodology (like Lean, Six Sigma, CQI, TQM, QFD, etc.). Even the most respectable health services research journals are clogged with examples of papers that violate this principle with great bravado, often obscuring the violation with a thick layer of fancy statistical methods. The principle remains that tools can only produce evaluable outcomes when put to use in protocols and processes, and any tool is only effective to the degree it enables a protocol or process that produces good outcomes.

Requirements for Evaluating an ESM-based Protocol

Therefore, to evaluate the ESM, the authors needed to first clarify how they intended to incorporate the ESM into a clinical protocol. Presumably, the protocol being assessed in the Wong analysis has something to do with the decision-making process during an inpatient encounter when the clinician repeatedly decides between two decision alternatives: (1) initiating sepsis treatment or (2) not initiating sepsis treatment. But, the Wong analysis failed to flesh out some essential details about the intended protocol. Unanswered questions include:

- Did the authors intend to compare “usual care” (current practice not using any sepsis predictive model) to an alternative protocol in which the clinicians relied exclusively on the ESM alert at a threshold value of 6?

- Or did they intend to compare usual care to a protocol in which the clinicians initiated treatment when either the ESM was positive or the clinician otherwise suspected sepsis?

- Or did they intend to compare usual care to a care process in which the clinicians considered both the ESM score and all the other data elements in some undefined subjective decision-making process?

- Or did they intend to compare all four of these alternative protocols?

- Also, for the protocols that utilized the ESM, did they also intend to compare different versions of the protocol using different threshold values for the ESM alert?
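One way to force the questions above into the open is to write each candidate protocol as an explicit decision rule. The sketch below is hypothetical: `Snapshot`, `usual_care`, `esm_only`, and `esm_or_clinician` are illustrative names, and reducing “the clinician suspects sepsis” to a yes/no flag is an assumption. The third alternative (an undefined subjective blend of the score and all the other data) cannot be written down as a rule at all, which is part of the difficulty.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Snapshot:
    esm_score: float          # model output at one moment during the admission
    clinician_suspects: bool  # clinician already suspects sepsis, alert or no alert


# A protocol maps a snapshot to a decision: True means "initiate sepsis treatment now".
DecisionRule = Callable[[Snapshot], bool]


def usual_care(s: Snapshot) -> bool:
    return s.clinician_suspects


def esm_only(threshold: float) -> DecisionRule:
    return lambda s: s.esm_score > threshold


def esm_or_clinician(threshold: float) -> DecisionRule:
    return lambda s: s.esm_score > threshold or s.clinician_suspects


def apply_protocols(rules: Dict[str, DecisionRule],
                    snapshots: List[Snapshot]) -> Dict[str, List[bool]]:
    return {name: [rule(s) for s in snapshots] for name, rule in rules.items()}


protocols: Dict[str, DecisionRule] = {
    "usual care": usual_care,
    "ESM only, threshold 6": esm_only(6),
    "ESM only, threshold 8": esm_only(8),
    "ESM or clinician, threshold 6": esm_or_clinician(6),
}
snapshots = [Snapshot(7.0, False), Snapshot(3.0, True), Snapshot(9.0, False)]

if __name__ == "__main__":
    for name, decisions in apply_protocols(protocols, snapshots).items():
        print(f"{name:30s} {decisions}")
```

On these three made-up snapshots, the same model output leads to different treatment decisions under different protocols and thresholds: the patient with a score of 7 and no clinician suspicion is treated under “ESM only, threshold 6” but not under “ESM only, threshold 8” or usual care.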

In addition to clarifying the clinical protocol, the authors needed to clarify the types of outcomes they were considering to be relevant to their assessment. Unanswered questions include:

- Were they considering only deaths from sepsis, or were they also considering other health outcomes, such as suffering extra days in the hospital or suffering side effects from treatment?

- In addition to health outcomes, were they considering economic outcomes, such as the cost of treatment or the cost of extra days in the hospital?

If the authors were only considering deaths from delayed treatment of sepsis, then we already knew the answer before doing any analysis. The optimal protocol would always be to treat every patient throughout every day of hospitalization, so as never to experience any delays in treatment. No clinical judgement and no predictive model required! Obviously, if the authors were considering the use of the ESM, they must have been considering multiple outcomes, such as the cost or side-effects of initiating antibiotic therapy in patients that do not actually suffer from sepsis.

But, if more than one outcome was being considered, then they needed to clarify how they were going to combine multiple outcomes to determine which protocol was the best. Unanswered questions include:

- Were they going to calculate some summary metric to be maximized or minimized?

- If so, were they going to assign utilities to the various health outcomes to allow them to generate a summary measure of the health outcomes, such as by generating a “quality adjusted life year” (QALY) metric?

- If they were considering economic outcomes, were they going to combine health and economic outcomes into a single metric, such as by generating cost-effectiveness ratios or assigning a dollar value to the health outcomes, or by asserting some dollars per QALY opportunity cost to allow the dollars to be converted to QALYs?
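For readers less familiar with these conversions, here is a minimal sketch of the two standard ways of collapsing health and economic outcomes into one number: net monetary benefit (QALYs valued at a willingness-to-pay rate, minus cost) and net health benefit (QALYs minus cost divided by a dollars-per-QALY opportunity cost), plus the incremental cost-effectiveness ratio (ICER). The per-patient QALY and cost figures and the $100,000 per QALY rate are invented for illustration; they are not estimates for any real sepsis protocol.

```python
def net_monetary_benefit(qalys: float, cost: float, wtp_per_qaly: float) -> float:
    """Value health in dollars: QALYs times willingness to pay, minus cost."""
    return qalys * wtp_per_qaly - cost


def net_health_benefit(qalys: float, cost: float, wtp_per_qaly: float) -> float:
    """Value dollars in health: QALYs minus cost converted at the dollars-per-QALY rate."""
    return qalys - cost / wtp_per_qaly


def icer(cost_new: float, qalys_new: float, cost_ref: float, qalys_ref: float) -> float:
    """Incremental cost-effectiveness ratio: extra dollars per extra QALY."""
    delta_qalys = qalys_new - qalys_ref
    if delta_qalys == 0:
        raise ValueError("ICER is undefined when the QALY difference is zero")
    return (cost_new - cost_ref) / delta_qalys


WTP = 100_000.0  # hypothetical dollars-per-QALY rate
# Hypothetical expected (QALYs, cost) per patient; illustrative only, not from any study.
protocols = {"usual care": (10.00, 20_000.0), "ESM protocol": (10.01, 20_300.0)}

if __name__ == "__main__":
    for name, (qalys, cost) in protocols.items():
        print(name, round(net_monetary_benefit(qalys, cost, WTP), 2),
              round(net_health_benefit(qalys, cost, WTP), 4))
    (q0, c0), (q1, c1) = protocols["usual care"], protocols["ESM protocol"]
    print("ICER ($/QALY):", round(icer(c1, q1, c0, q0)))
```

With these made-up numbers, the ESM protocol costs $30,000 per QALY gained, so it has the higher net monetary benefit whenever the willingness-to-pay rate exceeds $30,000 per QALY, and usual care wins below that rate.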

What the Wong study authors did instead

Unfortunately, the authors did not clarify the protocol, nor did they clarify the outcomes considered. Instead, they used an alternative method that could be utilized without taking a stand on the intended protocol or the relevant outcomes. They generated a Receiver Operating Characteristic (ROC) curve, which is a graph showing the trade-off between sensitivity and specificity of a test when different threshold values are used.

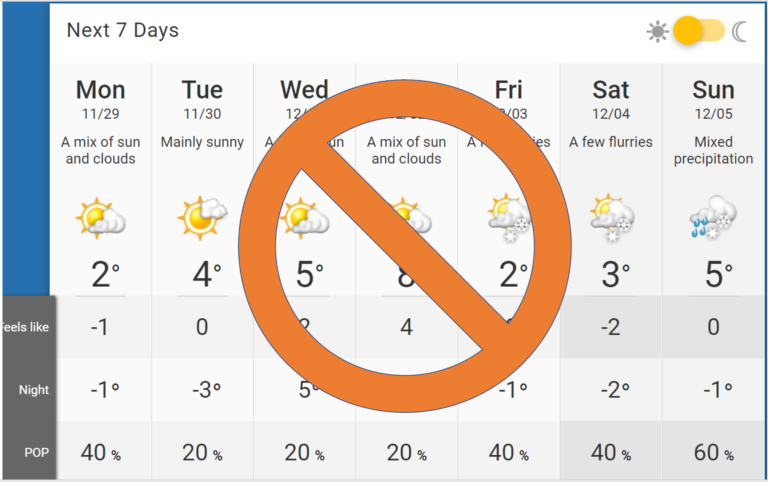

Virtually all introductory epidemiology textbooks describe ROC curves. A nice description is available here. As with a prior evaluation of the ESM, Wong et al. calculated the Area Under the ROC Curve (AUC), asserting this metric to provide “external validation” because they considered it to be a measure of “model discrimination.” The authors clarified that the AUC “represents the probability of correctly ranking 2 randomly chosen individuals (one who experienced the event and one who did not).” Although this definition is mathematically correct, in my opinion, the AUC is more appropriately understood and used as a general reflection of the favorability of the collection of available trade-offs between sensitivity and specificity across many different values of sensitivity or specificity. “Sensitivity” is the percentage of people with the predicted condition who are correctly identified by a “positive” test, defined as a test value greater than a particular threshold. Sensitivity is about avoiding missed cases of sepsis (false negatives). At the same threshold, “specificity” is the percentage of people without the predicted condition who are correctly identified by a “negative” test, defined as a test value less than the threshold. Specificity is about avoiding false alarms (false positives). As the threshold is decreased to make a test more sensitive in detecting impending sepsis, you have to put up with more false alarms (decreased specificity). The ROC curve traces the achievable combinations of sensitivity and specificity as the threshold is varied across its range. A greater AUC means that the ROC curve is generally bowed toward the upper left, offering more favorable combinations of sensitivity and specificity.

It is important to note, however, that when one test has a larger AUC than another test, it does not necessarily follow that it has superior specificity at every value of sensitivity, nor superior sensitivity at every value of specificity. As shown in the diagram above, the ROC curves for two tests can cross one another. Therefore, a test with a smaller AUC may actually perform better for a specific use in a clinical protocol.
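The ROC mechanics can be checked with a short, self-contained sketch on a toy data set of eight patients (the scores and labels are invented). It computes the ROC points by sweeping the threshold, the AUC by the trapezoid rule, and the AUC as the probability of correctly ranking a random case/non-case pair, and it shows two hypothetical tests whose curves cross. Counting a score exactly at the cut point as positive is a convention chosen for the sketch, and the function names are illustrative.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]  # (false positive rate = 1 - specificity, sensitivity)


def roc_points(scores: Sequence[float], labels: Sequence[int]) -> List[Point]:
    """Sweep the cut point from high to low; a score >= the cut point counts as positive."""
    n_pos = sum(labels)
    n_neg = len(labels) - n_pos
    points: List[Point] = [(0.0, 0.0)]  # cut point above every score: nobody is positive
    for cut in sorted(set(scores), reverse=True):
        tp = sum(1 for s, y in zip(scores, labels) if s >= cut and y == 1)
        fp = sum(1 for s, y in zip(scores, labels) if s >= cut and y == 0)
        points.append((fp / n_neg, tp / n_pos))
    return points


def auc_trapezoid(points: List[Point]) -> float:
    """Area under the ROC curve by the trapezoid rule."""
    return sum((x1 - x0) * (y0 + y1) / 2 for (x0, y0), (x1, y1) in zip(points, points[1:]))


def auc_rank(scores: Sequence[float], labels: Sequence[int]) -> float:
    """Probability that a random case outscores a random non-case (ties count half)."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def best_specificity(points: List[Point], min_sensitivity: float) -> float:
    """Highest specificity available at or above a required sensitivity."""
    return max(1 - fpr for fpr, sens in points if sens >= min_sensitivity)


labels = [1, 1, 0, 1, 0, 0, 1, 0]                  # toy data: 1 = developed sepsis
test_a = [0.9, 0.8, 0.7, 0.6, 0.55, 0.4, 0.3, 0.2]
test_b = [0.8, 0.6, 0.9, 0.5, 0.7, 0.3, 0.4, 0.2]

if __name__ == "__main__":
    for name, scores in [("A", test_a), ("B", test_b)]:
        pts = roc_points(scores, labels)
        print(name, auc_trapezoid(pts), auc_rank(scores, labels),
              best_specificity(pts, 1.0), best_specificity(pts, 0.5))
```

On this toy data, test A has an AUC of 0.75 and test B only 0.5625, and the two AUC calculations agree for each test. Yet if a protocol demanded that every case be caught (100% sensitivity), test B would do it with a specificity of 0.5 versus 0.25 for test A; if 50% sensitivity were enough, test A would reach it with perfect specificity while test B would manage only 0.5. Which test is “better” depends on where the protocol operates on the curve.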

So why do analysts report AUC values? Because they have not done the work to develop a decision analytic model to allow comparison of expected outcomes for alternative protocols using different predictive models with different threshold values. That’s like building a bridge part-way over the river and declaring it “done.”

So, how can the bridge be completed?

A complete analysis would compare the expected outcomes of alternative protocols. The analytic methodology appropriate to this purpose is called decision analytic modeling. In any type of analysis, it is always a good practice to start with the end in mind and work back to determine the necessary steps. I like to follow the lead of one of my healthcare heroes, David Eddy, MD, PhD. Eddy uses the term “clinical policies” to describe protocols and other designs for clinical practices. He advocates for the presentation of the results of decision analytic models in what he describes as a “balance sheet,” with columns corresponding to the policy alternatives considered and rows corresponding to the outcomes thought to be relevant and materially different across those policy alternatives. (Students of accounting will note that such a table is more conceptually aligned with the concept of an income statement than a balance sheet, but Eddy’s PhD was in mathematics, not business!) A balance sheet to compare alternative protocols for the initiation of antibiotic therapy for presumed sepsis would need to compare “usual care” (not using Epic’s ESM) to various alternative protocols using the ESM. The alternative ESM-based protocols could differ in terms of the threshold values applied to the ESM score and the intended impact of the ESM alert on the ordering behavior of the physician, leading to a balance sheet that looks something like the following:

The decision analytic model should be designed to fill in the values of such a table, providing estimates of the magnitudes of the outcomes and the range of uncertainty surrounding those estimates. The calculations for such a model can be done in many different ways, such as using traditional continuous decision analytic models or newer discrete agent-based simulation models. A traditional model could be conceptualized as follows:

As shown in the diagram above, the Wong paper authors provided only some of the numbers needed. Note that in this tree diagram, some of the branches terminate with “no impact of ESM” — meaning that for the subset of patients, the outcomes are expected to be the same for usual care and the ESM protocol being evaluated. The tree diagram allows the analyst to “prune” the “no impact” branches of the tree and focus on the subsets of patients for which outcomes are expected to be different.
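The pruning logic can be expressed as a minimal decision tree calculation. This sketch assumes a deliberately simplified structure with only two branches where an ESM-based protocol differs from usual care, and every probability and cost in it is a hypothetical placeholder, not a value from the Wong paper or any other source. The class and field names are illustrative. It also assumes that patients who truly have sepsis would have received antibiotics anyway, so an earlier start adds no antibiotic cost for them.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass
class TreeInputs:
    """Per-patient probabilities and costs; every value used below is hypothetical."""
    p_sepsis: float                         # chance the patient develops sepsis
    p_early_alert_given_sepsis: float       # alert fires before the clinician would have acted
    mortality_reduction_if_early: float     # absolute drop in death risk from the earlier start
    p_alert_given_no_sepsis: float          # false-alarm probability
    p_antibiotics_given_false_alarm: float  # false alarms that end in unnecessary antibiotics
    cost_per_evaluation: float
    cost_per_antibiotic_course: float


def incremental_outcomes(x: TreeInputs) -> Dict[str, float]:
    """Expected outcomes of an ESM-based protocol minus usual care, per patient.

    Only two branches differ from usual care:
      * sepsis, alert before clinician recognition -> earlier antibiotics
      * no sepsis, alert -> an evaluation and sometimes unnecessary antibiotics
    The "no impact" branches (clinician recognizes sepsis first; no sepsis and no alert)
    contribute zero difference and are pruned.
    """
    early_catch = x.p_sepsis * x.p_early_alert_given_sepsis
    false_alarm = (1 - x.p_sepsis) * x.p_alert_given_no_sepsis
    extra_courses = false_alarm * x.p_antibiotics_given_false_alarm
    evaluations = early_catch + false_alarm
    return {
        "deaths_averted": early_catch * x.mortality_reduction_if_early,
        "evaluations": evaluations,
        "unnecessary_antibiotic_courses": extra_courses,
        "extra_cost": evaluations * x.cost_per_evaluation
        + extra_courses * x.cost_per_antibiotic_course,
    }


if __name__ == "__main__":
    inputs = TreeInputs(0.07, 0.2, 0.02, 0.15, 0.1, 50.0, 200.0)
    for outcome, per_patient in incremental_outcomes(inputs).items():
        print(f"{outcome}: {per_patient * 1000:.2f} per 1,000 patients")
```

With these placeholder inputs, the sketch yields 0.28 deaths averted, 153.5 alert-triggered evaluations, about 14 unnecessary antibiotic courses, and about $10,465 in extra cost per 1,000 patients, relative to usual care: the kind of numbers that would fill one column of a balance sheet.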

One might object to the fact that such outcomes calculations usually rely on some assumptions. People sometimes attack models by saying “Aha! You made assumptions!” But, as long as the assumption values are based on the current-best-available information, and the analyst acknowledges the uncertainty in those values (ideally quantitatively through sensitivity analysis), the model is serving its purpose. The assumptions are not flaws in the model. They are features. The assumptions complete the bridge across the river, supporting protocol selection decisions that need to be made now, while also pointing the way to further research to provide greater certainty for the assumptions that are found to be most influential to protocol selection decisions to be made in the future.
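A one-way sensitivity analysis is one simple way to acknowledge that uncertainty quantitatively: vary one uncertain assumption across a plausible range, hold the others at base-case values, and see whether the preferred protocol changes. This sketch uses a toy incremental net-monetary-benefit formula; all base-case values in `BASE` are hypothetical, and `incremental_nmb` and `one_way` are illustrative names.

```python
from typing import Dict, Iterable, List, Tuple

# Base-case assumptions: every value here is a hypothetical placeholder.
BASE: Dict[str, float] = {
    "early_catch_rate": 0.01,       # share of patients whose sepsis the alert flags early
    "mortality_reduction": 0.005,   # absolute drop in death risk for those patients
    "qalys_per_life_saved": 10.0,
    "alerts_per_patient": 0.2,
    "cost_per_alert": 50.0,         # cost of responding to one alert
    "wtp_per_qaly": 100_000.0,      # dollars per QALY used to value health
}


def incremental_nmb(a: Dict[str, float]) -> float:
    """Per-patient net monetary benefit of the ESM protocol relative to usual care."""
    health_value = (a["early_catch_rate"] * a["mortality_reduction"]
                    * a["qalys_per_life_saved"] * a["wtp_per_qaly"])
    return health_value - a["alerts_per_patient"] * a["cost_per_alert"]


def one_way(param: str, values: Iterable[float],
            base: Dict[str, float] = BASE) -> List[Tuple[float, float, str]]:
    """Vary one assumption, hold the rest at base case, record the preferred protocol."""
    rows = []
    for v in values:
        nmb = incremental_nmb({**base, param: v})
        rows.append((v, nmb, "ESM protocol" if nmb > 0 else "usual care"))
    return rows


if __name__ == "__main__":
    for row in one_way("mortality_reduction", [0.0, 0.0005, 0.002, 0.005, 0.02]):
        print(row)
    for row in one_way("cost_per_alert", [10.0, 50.0, 200.0, 400.0]):
        print(row)
```

With these placeholder values, the preferred protocol flips from usual care to the ESM protocol once the assumed mortality reduction exceeds 0.001, and flips back to usual care once the cost of responding to each alert rises past $250. An assumption whose plausible range straddles a flip point like this is exactly the kind that would merit further research.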

Reporting Number Needed to Treat (NNT) is like building a bridge almost across the river.

For decades, it has been popular for health services researchers who were not fully on board with the principles of decision analytic modeling to go part-way by reporting a “number needed to treat” (NNT) statistic. The NNT statistic is the number of patients who need to undergo a treatment to save one life (or to achieve one unit of whatever was the primary outcome intended to be changed by the treatment). Such a statistic acknowledges a trade-off between two outcomes, or at least two statistics that are proxies for causally “downstream” outcomes. In the case of the Epic ESM evaluation by Wong et al., the authors adapted this NNT concept as a baby step toward acknowledging the fundamental trade-off between successfully identifying impending sepsis and chasing after false-alarm alerts. In this portion of their paper, they vaguely described two alternative protocols. In the first, the attending physicians were to ignore all but the first ESM alert message, and to perform some unspecified sepsis “evaluation” process for patients for whom a first ESM alert was generated before the physicians had initiated antibiotic therapy. They calculated that clinicians would “need to evaluate 8 patients to identify a single patient with eventual sepsis.” If they used an alternate protocol in which the “evaluation” was to be re-done after every alert (not just the first one), then the number needed to evaluate jumped to 109.
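Both statistics are simple ratios. This sketch shows the classic NNT (the reciprocal of the absolute risk reduction) and the evaluation analogue (evaluations performed per true case identified, i.e. the reciprocal of the positive predictive value of the evaluated alerts). The counts in the example are hypothetical, chosen only to reproduce ratios of 8 and 109; they are not the study’s actual counts, and the function names are illustrative.

```python
import math


def number_needed_to_treat(risk_without: float, risk_with: float) -> float:
    """Classic NNT: 1 / absolute risk reduction. Returns infinity when there is no
    reduction (a negative difference would instead call for a number needed to harm)."""
    arr = risk_without - risk_with
    return math.inf if arr <= 0 else 1.0 / arr


def number_needed_to_evaluate(evaluations: int, cases_identified: int) -> float:
    """Evaluations performed per true case identified, i.e. 1 / positive predictive value."""
    return math.inf if cases_identified == 0 else evaluations / cases_identified


if __name__ == "__main__":
    print(number_needed_to_treat(0.10, 0.08))   # about 50 treated per death averted
    print(number_needed_to_evaluate(800, 100))  # 8.0
    print(number_needed_to_evaluate(1090, 10))  # 109.0
```

Neither ratio, by itself, says what an evaluation costs or how many lives the earlier treatment would save.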

The reporting of these statistics is telling. It acknowledges one trade-off, but still stops short of estimating the outcomes of interest. How costly is that evaluation? Is it just an instance of physician annoyance leading to some “alert fatigue,” or does it involve ordering costly tests? How many lives would be saved? Would there be side effects from the tests or the treatment? How expensive would the treatment be? Is 8 a reasonable number of “evaluations” to do? Is 109 reasonable?

Interestingly, the NNT statistics did not make it into the abstract. Instead, the less useful AUC statistic was elevated to the headline, perhaps because it seemed to the authors to be less tangled-up in protocols and therefore somehow more rigorous, objective and “scientific.”

Conclusions

Wong and colleagues’ stated conclusion was that medical professional organizations constructing national guidelines should be cognizant of the broad use of proprietary predictive models like the Epic ESM and make formal recommendations about their use. I strongly agree with that conclusion.

I would add a second conclusion of my own: peer reviewers for professional journals such as JAMA Internal Medicine should insist that published evaluations of predictive models include:

- Documentation to clarify one or more specific protocols utilizing the model (and “usual care”),

- Estimates of all the relevant outcomes thought to be materially different across the protocols, and

- Documentation of the assumption values used in the model generating the outcomes estimates.

In other words, stop publishing predictive model “validations.” Instead, insist that investigators finish the job and publish decision analytic models comparing clinical protocols, some of which may incorporate predictive models.

8 thoughts on “U of Michigan study: Epic’s sepsis predictive model has “poor performance” due to low AUC, but reporting AUC is like building a bridge half way over a river. How to finish the job.”

Not having studied introductory epidemiology, I got lost on the ROC curve, so I couldn’t interpret this article. Thanks for drawing the curve and explaining it. What is your impression of the ESM test? Missing two-thirds of sepsis cases despite generating alerts (ESM > 6) for 18% of hospitalized patients (2.7 times as many alerts as actual sepsis cases) doesn’t sound like a particularly useful test, whatever decision framework you are working from.

I agree that ESM is not superficially impressive. But whether it is a good idea or not depends on how it is used. If the alert is nothing more than a nudge for the attending physician to remember to consider the possibility of sepsis, and one out of 8 nudges leads to getting an earlier jump on treatment and that occasionally saves a life, that sounds excellent. But if the medical-legal team makes the attending respond to every alert with some expensive workup that also increases length of stay, and that happens 108 times just to get a short jump on treatment for one patient, that sounds bad. The key point is that you can only evaluate it in the context of the intended use and the associated impact on outcomes.
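The commenter’s figures can be cross-checked with a few lines of arithmetic. This sketch assumes “missing two-thirds of sepsis cases” means a patient-level sensitivity of one third, and it counts alerted patients and cases per patient rather than per alert, so it is only a rough consistency check; `implied_rates` is an illustrative name.

```python
from typing import Dict


def implied_rates(alert_rate: float, alerts_per_case: float, sensitivity: float) -> Dict[str, float]:
    """Back out prevalence and positive predictive value from patient-level rates.

    alert_rate:      share of hospitalized patients who get an alert (e.g. 0.18)
    alerts_per_case: alerted patients per actual sepsis case (e.g. 2.7)
    sensitivity:     share of sepsis cases that get an alert (e.g. 1/3)
    """
    prevalence = alert_rate / alerts_per_case
    caught_cases = sensitivity * prevalence   # share of all patients who are true positives
    ppv = caught_cases / alert_rate           # share of alerted patients who have sepsis
    return {"prevalence": prevalence, "ppv": ppv, "alerted_per_case_found": 1 / ppv}


if __name__ == "__main__":
    for name, value in implied_rates(0.18, 2.7, 1 / 3).items():
        print(f"{name}: {value:.3f}")
```

With an 18% alert rate, 2.7 alerts per sepsis case, and a sensitivity of one third, the implied sepsis prevalence is about 6.7%, the implied positive predictive value is about 12%, and the implied number of alerted patients per true case found is 8.1, close to the “one out of 8” figure in the reply.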

Another conclusion from the superficially unimpressive ESM performance is that it is difficult to get good predictive performance in models based only on “free” data already consistently lying around with good-enough data accuracy and completeness. Good predictive performance often requires WORK to capture the necessary inputs consistently and completely and accurately. The implication is that the starting focus should be on designing better protocols, and then we should look for the decision diamonds in those protocols where the physician makes a choice and sometimes it applies to a patient that will not ultimately benefit (or might even get harmed) from whatever is downstream from that diamond. Those are the places calling out for a predictive model. Then we can figure out what data can help us tell the difference between the patients that will not benefit (or get harmed) and the other patients. Then we can do the work of capturing that data. The step of doing regression or training a neural network is merely a handy way of automatically generating an algorithm that incorporates multiple predictors into a single useful score. Too many predictive modelers start by assuming it is infeasible to capture new data. If the decision is sufficiently consequential, then capturing the necessary data might be worthwhile. If it is not consequential, then maybe we’re working on supporting the wrong decision!

I could summarize my decades of experience on this general topic as “nothing in life is free” and “you get what you pay for,” despite what you read in the PowerPoint slides of analytics start-up entrepreneurs.

Hi Richard, This article really strikes a chord, and reinforces our strategy here at Humana to be a data-driven company. We develop predictive models (using state-of-the-art ML tools) for the businesses to use, and then validate via experiments that the interventions performed based on those predictions have the intended effect. Of course, this is a big undertaking across a large company like Humana, but I am happy to say our leaders are supporting our investments in building those systems. I do like your analogy of a half-built bridge. It is a feat of engineering, but doesn’t bring value to the community unless you connect it to the other side.

Steve, great to hear from you! Glad to hear you’re at Humana now, and I’m intrigued by the idea that Humana established a Dir of AI Engineering position and that Humana “gets” the idea of evaluating interventions based on predictions, rather than just validating algorithms. All the best!

EMAIL FROM: Singh, Karandeep

Aug 31, 2021 12:03 PM

(posted with permission)

Dear Dr. Ward,

Thank you for the thoughtful write-up.

I agree with you that the AUC, considered by itself, is not a sufficient measure to determine whether a model could be useful when linked to an intervention. Additionally, I agree that the AUC by itself is not sufficient to determine whether a model is good enough to use and sustain, which depends on follow-up work involving clinical trials linking a model to an intervention. The primary reason we focused on AUC in this paper is that we wanted to norm our results against what is being reported to Epic customers based on their implemented definition of sepsis. Epic’s sepsis definition differs from commonly used definitions in both research and quality improvement. In our paper, we tried to narrowly make the point that Epic’s selected sepsis definition inflates the AUC as compared to other standard definitions when used to judge their model against. The AUC is further exaggerated because Epic includes antibiotic orders as predictors within the model. As a result, the model’s predicted score goes up *after* clinicians have recognized it. If the model is to be used to improve time to antibiotics (as was done in the recent Critical Care Medicine paper by Yasir Tarabichi and colleagues), including antibiotic orders as predictors *and* also part of the outcome definition is problematic.

With regard to decision analytic thinking, we actually did include a decision curve analysis in our original draft, expressing the value of the model in the form of a “net benefit.” This net benefit depends on the accepted range of trade-offs between false positives and true positives for a given clinical scenario and has been described in a series of papers by Andrew Vickers. While hard outcomes (e.g. mortality) cannot be calculated from silently running a model, net benefit can be calculated with silent scoring. We ended up needing to remove this because we were asked to shorten our paper from 3,000 to 1,500 words, and there was simply not enough bandwidth to introduce, report, and explain our findings. I did share the findings of the decision curve analysis with colleagues at Epic. We have subsequent data from actual clinical utilization of the sepsis model but because our transition to using the model clinically occurred in the midst of COVID-19 (which interplays with sepsis and associated definitions), we have not yet decided if we will publish on this.
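For reference, the net benefit in the decision curve analysis papers by Vickers and colleagues is the proportion of true positives minus the proportion of false positives weighted by the threshold odds pt/(1 − pt), compared against the “treat everyone” and “treat no one” strategies. The sketch below computes it on ten invented patients. It assumes the model output is a calibrated probability; applying it to the ESM would require mapping ESM scores to risks, which is not shown. Function names are illustrative.

```python
from typing import List, Sequence, Tuple


def net_benefit(tp: int, fp: int, n: int, pt: float) -> float:
    """True positives per patient minus false positives per patient weighted by pt / (1 - pt)."""
    return tp / n - (fp / n) * (pt / (1 - pt))


def decision_curve(risk: Sequence[float], outcome: Sequence[int],
                   thresholds: Sequence[float]) -> List[Tuple[float, float, float]]:
    """(threshold, net benefit of using the model, net benefit of treating everyone).
    Treating no one has a net benefit of 0 at every threshold."""
    n = len(outcome)
    prevalence = sum(outcome) / n
    rows = []
    for pt in thresholds:
        tp = sum(1 for r, y in zip(risk, outcome) if r >= pt and y == 1)
        fp = sum(1 for r, y in zip(risk, outcome) if r >= pt and y == 0)
        treat_all = prevalence - (1 - prevalence) * (pt / (1 - pt))
        rows.append((pt, net_benefit(tp, fp, n, pt), treat_all))
    return rows


risk = [0.9, 0.6, 0.4, 0.3, 0.2, 0.1, 0.05, 0.02, 0.01, 0.01]  # invented predicted risks
outcome = [1, 1, 0, 1, 0, 0, 0, 0, 0, 0]                       # invented outcomes

if __name__ == "__main__":
    for pt, nb_model, nb_all in decision_curve(risk, outcome, [0.05, 0.1, 0.2, 0.3, 0.5]):
        print(f"pt={pt:.2f}  model={nb_model:+.3f}  treat all={nb_all:+.3f}  treat none=+0.000")
```

At a threshold of 0.2, the toy model’s net benefit is 0.25 versus 0.125 for treating everyone; at 0.5 it is 0.2 versus −0.4.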

I recognize our paper has been used by folks to criticize Epic, in some cases unfairly. I publicly addressed this in a Twitter thread shortly after the paper came out (https://twitter.com/kdpsinghlab/status/1407208969039396866?s=21). I’ve also been public about correcting inaccurate reporting on our paper (e.g. in the Verge) which made statements that were unfair to Epic.

We’ve also previously evaluated Epic models, having published 2 papers on the Epic deterioration index. In both of those papers, our evaluation numbers fairly closely matched what Epic was reporting internally to customers. The fact that our findings differ on sepsis has made it more of a focal point.

I do view the current level of transparency of industry-developed models, broadly speaking, as inadequate. This is not a criticism of Epic specifically but of this area more broadly. I don’t view this paper as an “our work is done” type of paper. However, I do think that healthcare’s reliance on industry for providing models *and* validating the models would benefit from independent checks on the claims. This, narrowly, is what we intended to do in our paper.

Appreciate your reaching out and for sharing your thoughtful piece.

All the best,

Karandeep

Karandeep Singh, MD, MMSc

Assistant Professor of Learning Health Sciences, Internal Medicine, Urology, and Information, University of Michigan

Chair, Michigan Medicine Clinical Intelligence Committee

Dear Dr. Singh,

Thank you for such a quick and thorough response.

I understand and agree with your point that the inclusion of antibiotic orders as predictors in the ESM is problematic and would inflate AUC. I also agree that the use of a non-standard definition of sepsis in Epic’s own evaluation of ESM performance would make their evaluation difficult to interpret and compare. I further agree that there is inadequate transparency in proprietary decision support algorithms, and that our health care system benefits from assessments by independent experts, as you and your team have contributed. Also, I appreciated that you and your colleagues were very fair to Epic and that you separated yourselves from the “hate on Epic” crowd. I tried to do the same in my blog post.

I am sorry to hear that the decision analysis work that you did was not prioritized for inclusion under the 1500 word limit. I for one would have been more than happy to read more words! I agree that Vickers’ concepts of “net benefit” and “decision curve analysis” are directly relevant to the point I was making about considering trade-offs, and I see that you did include some coverage of the trade-offs in the “number needed to evaluate” statistics included in the paper. My only objection to Vickers’ methods and the NNT-like statistics is that they seem to make the trade-offs appear simpler by expressing only a trade-off between one positive and one negative metric. Dealing with only two metrics and one “exchange rate” may reduce the amount of math involved, but in my opinion, most decisions include more than two relevant outcomes, particularly if one is willing to consider pain, function and economic outcomes as well as deaths. To use Vickers’ method or NNT, one generally must implicitly bundle multiple outcomes into just two metrics that serve as proxies for multiple unspecified downstream outcomes. To calculate net benefit using Vickers’ methods (as described at https://www.bmj.com/content/352/bmj.i6.long), you would presumably have to determine the “exchange rate” by asking physicians “how many patients are you willing to evaluate to detect one previously-undetected case of sepsis X hours earlier?” You can skip over actually asking that question to physicians by just generating the “decision curve” using a range of hypothetical answers to that question (or a range of the associated threshold probabilities), but that just pushes off the question to the person interpreting the decision curve. The key point is that the analysis stops short of explaining one or more alternative protocols in which the predictive model is to be incorporated, and it stops short of calculating the expected outcomes for those protocols. The reader is left to somehow do that math subjectively in their heads, which is why I consider it to go “almost across the river.”
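The “exchange rate” question maps directly onto the threshold probability. If a physician will evaluate at most N patients to find one case, that means tolerating N − 1 false positives per true positive, and setting the net benefit weight pt/(1 − pt) equal to 1/(N − 1) gives pt = 1/N. A minimal sketch, with hypothetical function names, confirms that net benefit is zero exactly at that break-even rate.

```python
def threshold_from_willingness(n_evaluations_per_case: float) -> float:
    """Willing to evaluate N patients to find one case -> tolerate N - 1 false positives
    per true positive. Setting pt / (1 - pt) = 1 / (N - 1) gives pt = 1 / N."""
    if n_evaluations_per_case <= 1:
        raise ValueError("N must be greater than 1")
    return 1.0 / n_evaluations_per_case


def net_benefit(tp: int, fp: int, n: int, pt: float) -> float:
    """True positives per patient minus false positives per patient weighted by pt / (1 - pt)."""
    return tp / n - (fp / n) * (pt / (1 - pt))


if __name__ == "__main__":
    for n_willing in (5, 8, 20, 109):
        print(n_willing, round(threshold_from_willingness(n_willing), 4))
```

Willingness to evaluate 8 patients per case corresponds to a threshold of 0.125, and 109 corresponds to roughly 0.009. Choosing among such values is the judgment that the decision curve leaves to its reader.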

You made the point that the determination about whether a model is good enough to use and sustain depends on follow-up work involving clinical trials linking a model to an intervention. I agree that actually measuring real-world outcomes in the context of a clinical trial is the ideal. But, creating a decision analytic model that “crosses the river” by providing calculations of all the expected outcomes using transparent logic and assumptions based on best available information would also be an important contribution. Not only would such an analysis inform physicians considering if and how to use the Epic Sepsis Model, but it might also help move our field beyond AUC-based “validations” and hopefully establish a precedent and an expectation for outcome-based decision analysis.

I’d be happy to discuss this further with you or your colleagues and would be happy to collaborate if you or others on your team decide to pursue the further research you have alluded to.

Best regards,

Rick Ward

EMAIL FROM: Singh, Karandeep

Aug 31, 2021 9:08 PM

(posted with permission)

Dr. Ward,

Feel free to post my email to your blog on my behalf — you have my permission. You’re welcome to share your reply there as well of course.

The primary challenge with looking at pain, function, mortality, and economic outcomes is that I don’t think these can be (accurately) estimated in a silent validation. In general, you need to link a model to an intervention to be able to estimate how much each of these measures changes with and without the model-based intervention. While the decision curve analysis represents a simplification, it can feasibly be calculated as long as the accepted range of risk tolerance can be solicited from domain experts (or patients, depending on the model’s use case). Its feasibility is attractive as a starting point. At the moment, I have limited bandwidth but at some point in the future, perhaps after the new year, I’d be happy to set up a virtual meeting for us to chat and exchange ideas.

Thanks again,

Karandeep

Karandeep Singh, MD, MMSc

Assistant Professor of Learning Health Sciences, Internal Medicine, Urology, and Information, University of Michigan

Chair, Michigan Medicine Clinical Intelligence Committee

Three years later, in 2024, the debate about Epic’s sepsis prediction model continues. But evaluations and discussions remain limited to basic predictive metrics (sensitivity, specificity, positive predictive value, negative predictive value, accuracy, and area under the ROC curve), NOT comparisons of the outcomes from alternative sepsis monitoring and treatment protocols. See https://www.beckershospitalreview.com/ehrs/accuracy-of-epics-sepsis-model-faces-scrutiny.html